Boeing 737 Max Issues are a Preview of Driverless Liability Issues to Come

One of the best books I’ve ever read was Joe Sutter’s history of the development of the Boeing 747. Sutter was lead engineer of Boeing’s 747 project. His book tells the long and detailed story of how Boeing conceptualized, designed, built and marketed the 747.

Part of the book describes the development of Boeing’s original 737, a much smaller but equally important aircraft that came of age in the same era as the 747. Since reading the book, I’ve always felt an extra level of comfort when boarding a Boeing aircraft, knowing just how much work goes into making an aircraft airworthy. So I’ve paid especially close attention to the recent crashes of Lion Air’s and Ethiopian Airlines’ modern 737 Max jets.

Everyone agrees the crashes were tragic and preventable. But there is some disagreement about the root cause, which is why I am writing this article. I believe the Boeing 737 Max crashes are a prelude to legal arguments that will unfold over and over in a world increasingly controlled by automation.

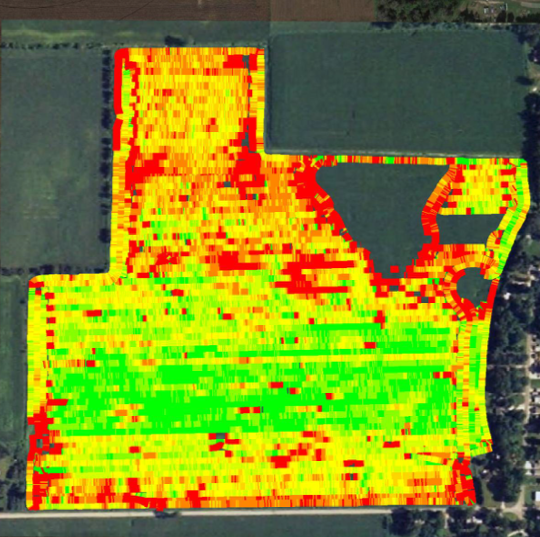

When I am asked about the legal concerns with autonomous and robotic farm equipment, I always respond that the main concern is liability. Who is responsible when something goes wrong? The 737 Max crashes show that, with automation, sorting out liability is more challenging. There are three potential causes of the crashes:

First, Boeing’s software. The planes have sophisticated MCAS software that is designed to help the pilots by pushing the nose of the plane down when sensor data indicates a stall is imminent (for example, when the plane is climbing too steeply). Many have claimed the MCAS software malfunctioned. Boeing is issuing a patch for the software as we speak, suggesting that MCAS was likely programmed too aggressively.

Second, the Angle of Attack sensor manufacturer. It appears that both planes that crashed had faulty sensors that fed the planes’ MCAS software inaccurate data. As a result, MCAS concluded a stall was occurring when no such conditions existed.

Finally, the human element. The investigation revealed that the Lion Air plane should never have taken off. Pilots on earlier flights knew the Angle of Attack sensors were faulty, but they were not fixed. Pilots could have overridden the MCAS system, but we believe they did not. (We still don't know about the Ethiopian Airlines flight.)

So what was the cause? Software, hardware, or human error? It will take years for the courts to sort this out. Vox (and others) have suggested the cause was that Boeing used software programming as a shortcut to avoid redesigning hardware or retraining pilots on the Max’s flying characteristics.

Tagging the software developer with liability, even when it is their fault, is not necessarily simple. Software developers often build indemnity agreements into their product licenses, disclaiming all liability for programming errors and requiring users to indemnify the developers for things that go wrong. I do not know if buying a new 737 Max comes with such a clause, but I’m sure it comes with a licensing agreement for the plane’s software.

That is why this discussion has implications for driverless farm equipment too. Should a manufacturer be allowed to push liability to the farmer for damages that are caused by their equipment, even if the root cause is a software glitch and not the “operator”? Some manufacturers will try, for certain.

I still feel safe boarding a 737, knowing the original design dates back to the Joe Sutter era and has been flying safely for 50+ years. The software issues will be fixed quickly, no doubt, but the legal issues will linger on for years.